Uncertainty Theory #22

On listening in human pre-existence and sound or holophonic immersion. Part I.

Hearing develops quite early in the life of the fetus. Recent studies suggest that the fetus responds to sound at about 16 weeks of gestation, or even as early as 12 weeks. The response to speech sounds seems to emerge closer to 24 weeks. What is evident is that from the beginning of the third trimester the fetus learns much about its environment through sound. In particular, it pays attention to familiar voices and to the language it hears around it.

The fetus hears its mother's voice better than that of other people, because the voice reaches it directly through the mother's body as vibrations, in addition to the sounds from outside the uterus that it picks up with its ears. These sounds are not distorted by the heartbeat or the rumbling of the mother's stomach, as these body sounds have a much lower pitch than the human voice. In this way, the fetus gets used to the people around it and listens selectively to the sounds that are familiar to it.

Our unconscious, says Gaston Bachelard, is spatial and architectural. It is also phonic. It is so before being a groove of mnemic perceptual-visual traces.

Plato said that before we are born, we already have an original music that resonates in us in relation to the matrix from which we come.

Heidegger spoke of a sonic pre-comprehension tied to the nature of the matrix and to the immemorial past.

Humans possess a kind of personal biological sonar that tells them where a sound they have received is coming from: the placement of our ears on opposite sides of the head gives us a built-in spatial sensor.

Intensity level and arrival time

Physiologically speaking, the brain takes two values into account when positioning a sound stimulus: the intensity level and the time of arrival. The interaural differences in these two parameters are what the brain uses to locate the stimulus, with an error of about 3 to 4 degrees.

In the horizontal plane (azimuth, X-axis), the interaural level difference (ILD) and the interaural time difference (ITD) between the two ears determine the position of the stimulus. Either of these cues alone can be enough to create the perception of horizontal localization. Here the ears work together; each depends on the other.
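To give a sense of the time scales involved, the arrival-time cue can be sketched with Woodworth's spherical-head approximation, ITD = (a / c) · (θ + sin θ). This is a swapped-in textbook model rather than anything stated in the article, and the head radius and speed of sound below are assumed values.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumption: average adult head radius
SPEED_OF_SOUND = 343.0   # assumption: m/s in air at about 20 °C


def itd_seconds(azimuth_deg: float) -> float:
    """Interaural time difference for a distant source.

    azimuth_deg: 0 is straight ahead, 90 is directly to one side.
    The sign of the result tells which ear receives the sound first.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must lie between -90 and 90")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND) * (theta + math.sin(theta))


if __name__ == "__main__":
    for azimuth in (0, 4, 30, 90):
        microseconds = itd_seconds(azimuth) * 1e6
        print(f"{azimuth:>3} deg -> {microseconds:6.1f} microseconds")
```

Under these assumptions the largest difference, for a source directly to one side, stays below one millisecond, so the brain is resolving differences of a few tens of microseconds when it separates positions a few degrees apart.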

The skull and thorax act as a kind of "resonator" that gives the brain an additional sound stimulus, a point of comparison for localizing the source. We can check this by plugging each ear with an index finger, closing off the entrance to the ear canal: what we then hear is only our own cranial resonance.

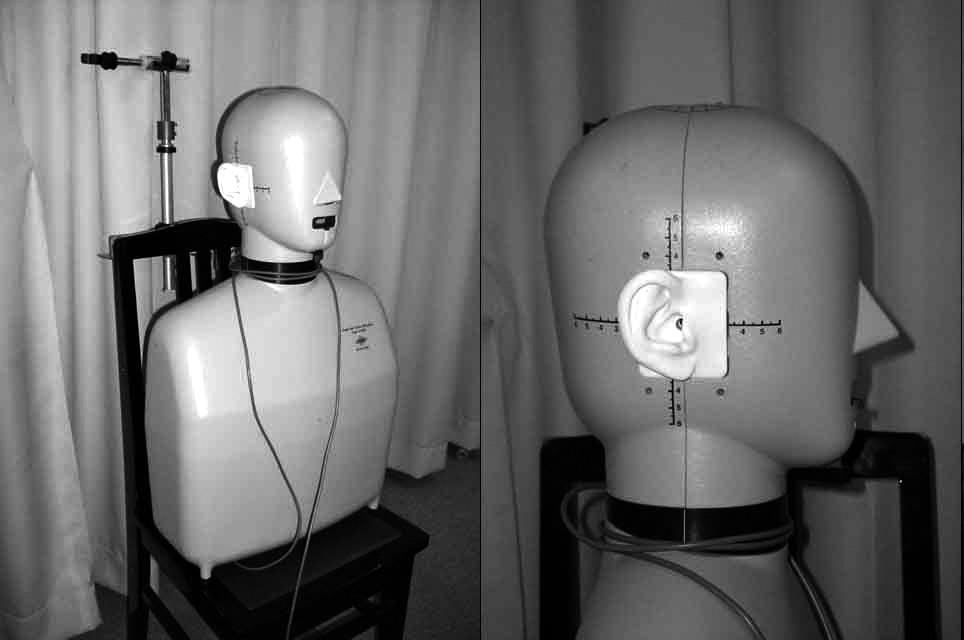

In the vertical plane (zenith, Y-axis), the external ear plays a very important role. It conducts sounds into the ear canal, where spherical or omnidirectional waves are transformed into plane waves and standing waves are generated. These act as a filter on some frequencies and so change the spectral content (the timbre). The brain decodes this tonal coloration by projecting the sound image onto the vertical Y-axis. This filtering is described by what is known as the head-related transfer function (H.R.T.F.), and it is the magic behind 3D or immersive sound.

In this localization plane the ears work separately: each is totally independent of the other, and no comparison is made between them.
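The idea that standing waves in the ear canal favor some frequencies can be illustrated with a deliberately simple model: a uniform tube, open at the entrance and closed at the eardrum, which resonates at odd multiples of c / (4L). The 25 mm canal length and the tube idealization are assumptions, not figures from the article, and the sketch covers only the canal. The direction-dependent filtering of the pinna, which is what the H.R.T.F. captures, is not modelled here.

```python
SPEED_OF_SOUND = 343.0   # assumption: m/s in air at about 20 °C
CANAL_LENGTH_M = 0.025   # assumption: typical adult ear canal length


def canal_resonances(length_m: float, count: int = 3,
                     speed: float = SPEED_OF_SOUND) -> list[float]:
    """Resonant frequencies (Hz) of a tube closed at one end.

    A quarter-wave resonator supports standing waves at
    f_n = (2n - 1) * speed / (4 * length), for n = 1, 2, 3, ...
    """
    if length_m <= 0 or count < 1:
        raise ValueError("length_m must be positive and count at least 1")
    return [(2 * n - 1) * speed / (4 * length_m) for n in range(1, count + 1)]


if __name__ == "__main__":
    for f in canal_resonances(CANAL_LENGTH_M):
        print(f"{f:8.0f} Hz")
```

With these assumed values the first resonance falls in the low kilohertz range, the region where human hearing is most sensitive.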

Another role of the pinna is to provide front and rear localization (Z-axis). It does so through its own anatomical architecture, which gives some directionality at high frequencies and adds another layer of H.R.T.F. filtering to the equation. The acoustic shadowing produced by the anatomy of the ears also changes the tonality and intensity.

Localization perception also includes what is known as "sound image distortion", where the perceived spatial image differs from the intended sound source.

At other times the position of the stimulus becomes "disembodied": as with airplanes, whose sound reaches us mixed with reflections, it is very difficult to know its origin.

In 1951 a very interesting psychoacoustic effect was demonstrated: the "Law of Precedence", better known as the "Haas Effect". It shows how important the time of arrival of two or more sounds is in determining which came first and, therefore, where the sound stimulus originates.

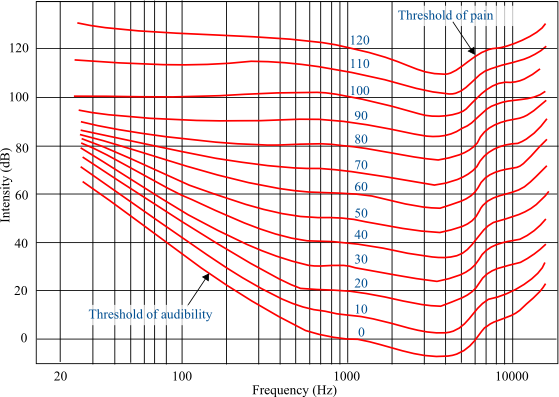
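As a rough illustration of the law of precedence, the sketch below classifies what a listener tends to perceive when the same sound arrives twice, separated by a given delay. The function name and the two thresholds (about 1 ms and about 30 ms) are assumptions chosen for illustration. The real limits vary with the type of signal and with the listener.

```python
def perceived_image(delay_ms: float,
                    summing_limit_ms: float = 1.0,
                    echo_threshold_ms: float = 30.0) -> str:
    """Rough perceptual outcome for a sound followed by a delayed copy.

    Both thresholds are illustrative assumptions, not measured constants.
    """
    if delay_ms < 0:
        raise ValueError("delay_ms must be non-negative")
    if delay_ms < summing_limit_ms:
        # Both arrivals blend into one image located between the sources.
        return "summing localization"
    if delay_ms <= echo_threshold_ms:
        # One fused event, heard where the first wavefront came from.
        return "fused, localized at the first arrival"
    # The delayed copy is heard as a distinct repetition.
    return "separate echo"


if __name__ == "__main__":
    for delay in (0.3, 5, 20, 60):
        print(f"{delay:>5} ms -> {perceived_image(delay)}")
```

The middle band is the one the effect is named for: the later arrival adds to the sense of loudness and space, but the origin is attributed to the sound that arrived first.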

These investigations were fundamental for 3D technology: the isophonic (equal-loudness) curves with respect to intensity, and the law of precedence with respect to the arrival time of the sound stimulus.